I previously installed Kubeflow using MiniKF, a Minikube-based VirtualBox image.

Later, around the release of Ubuntu 19.10, it was announced that Kubeflow would become installable as a MicroK8s add-on.

MicroK8s is a Kubernetes environment that can be installed easily with Snap. Add-ons such as Dashboard, CoreDNS, and Istio can be enabled with the microk8s.enable command.

Just recently, the add-on appears to have landed in the MicroK8s 1.17 release (11 December 2019). The release notes say:

New addon: kubeflow. Give it a try with microk8s.enable kubeflow.

Since this is MicroK8s, Ubuntu 19.10 does not seem to be strictly required, but I prepared an environment and tried installing it.

First, install MicroK8s with Snap.

$ sudo snap install microk8s --classic

microk8s v1.17.0 from Canonical✓ installed

Add your user to the microk8s group, then log out and back in.

$ sudo usermod -a -G microk8s hoge
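Group membership added with usermod only takes effect in a new login session, which is why the re-login is needed. As a minimal sketch (the helper name has_group is hypothetical, not part of MicroK8s), the current session can be checked like this:

```shell
# has_group GROUP "SPACE SEPARATED GROUP LIST"
# Returns 0 if GROUP appears as a whole word in the list.
has_group() {
  case " $2 " in
    *" $1 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# id -nG prints the group names of the current session, space-separated.
if has_group microk8s "$(id -nG)"; then
  echo "microk8s group is active in this session"
else
  echo "microk8s group is not active yet; log out and back in"
fi
```

If the second message appears right after running usermod, that is expected until a new session is started.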

Check the status. kubeflow is shown as disabled.

$ microk8s.status --wait-ready

microk8s is running

addons:

cilium: disabled

dashboard: disabled

dns: disabled

fluentd: disabled

gpu: disabled

helm: disabled

ingress: disabled

istio: disabled

jaeger: disabled

juju: disabled

knative: disabled

kubeflow: disabled

linkerd: disabled

metallb: disabled

metrics-server: disabled

prometheus: disabled

rbac: disabled

registry: disabled

storage: disabled
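To check a single add-on from a script instead of reading the whole list, the status text can be filtered. The sketch below assumes the "name: state" line format shown above (other MicroK8s versions may print a different layout); addon_state is a hypothetical helper name.

```shell
# addon_state NAME
# Reads microk8s.status output on stdin and prints the state of add-on NAME.
# Exits non-zero if no "NAME: state" line is found.
addon_state() {
  awk -v name="$1" '
    { sub(/^[ \t]+/, "") }                 # tolerate indented lines
    index($0, name ": ") == 1 {            # line starts with "<name>: "
      print substr($0, length(name) + 3)
      found = 1
    }
    END { exit !found }
  '
}

# Usage (requires MicroK8s to be installed):
#   microk8s.status --wait-ready | addon_state kubeflow
```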

Set up an alias so that microk8s.kubectl can be run as kubectl.

$ sudo snap alias microk8s.kubectl kubectl

Added:

- microk8s.kubectl as kubectl

Enable kubeflow. Add-ons it depends on, such as dns and storage, are enabled first, and then the Kubeflow deployment starts.

A large number of Pods are started, and it took about 30 minutes for the Services to become Ready.

$ microk8s.enable kubeflow

Enabling dns...

Enabling storage...

Enabling dashboard...

Enabling ingress...

Enabling rbac...

Enabling juju...

Deploying Kubeflow...

Kubeflow deployed.

Waiting for operator pods to become ready.

Waited 0s for operator pods to come up, 28 remaining.

Waited 15s for operator pods to come up, 27 remaining.

Waited 30s for operator pods to come up, 27 remaining.

Waited 45s for operator pods to come up, 27 remaining.

Waited 60s for operator pods to come up, 25 remaining.

Waited 75s for operator pods to come up, 21 remaining.

Waited 90s for operator pods to come up, 19 remaining.

Waited 105s for operator pods to come up, 18 remaining.

Waited 120s for operator pods to come up, 17 remaining.

Waited 135s for operator pods to come up, 15 remaining.

Waited 150s for operator pods to come up, 13 remaining.

Waited 165s for operator pods to come up, 13 remaining.

Waited 180s for operator pods to come up, 11 remaining.

Waited 195s for operator pods to come up, 10 remaining.

Waited 210s for operator pods to come up, 8 remaining.

Waited 225s for operator pods to come up, 4 remaining.

Waited 240s for operator pods to come up, 3 remaining.

Operator pods ready.

Waiting for service pods to become ready.

Congratulations, Kubeflow is now available.

The dashboard is available at https://localhost/

Username: admin

Password: XXXXXXXXXXXXXXXXXXXXXXX

To see these values again, run:

microk8s.juju config kubeflow-gatekeeper username

microk8s.juju config kubeflow-gatekeeper password

To tear down Kubeflow and associated infrastructure, run:

microk8s.disable kubeflow
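The installer polls every 15 seconds until the pods are up. The same idea can be sketched for checking readiness yourself, for example after a reboot. This is an illustration only, not the add-on's actual implementation; wait_until, count_not_ready, and kubeflow_ready are hypothetical helper names, and the sketch assumes kubectl is aliased as above.

```shell
# wait_until TIMEOUT_S INTERVAL_S COMMAND...
# Re-runs COMMAND every INTERVAL_S seconds until it succeeds,
# giving up (return 1) once TIMEOUT_S seconds have been waited.
wait_until() {
  _timeout=$1; _interval=$2; shift 2
  _waited=0
  until "$@"; do
    [ "$_waited" -ge "$_timeout" ] && return 1
    sleep "$_interval"
    _waited=$((_waited + _interval))
  done
}

# Reads "kubectl get pods --no-headers" output on stdin and prints how many
# pods have fewer ready containers than expected (Completed pods are skipped).
count_not_ready() {
  awk '$3 != "Completed" { split($2, r, "/"); if (r[1] != r[2]) n++ }
       END { print n + 0 }'
}

# Succeeds only when at least one pod exists and none is still starting.
kubeflow_ready() {
  _pods=$(kubectl get pods -n kubeflow --no-headers) || return 1
  [ -n "$_pods" ] && [ "$(printf '%s\n' "$_pods" | count_not_ready)" -eq 0 ]
}

# Usage: wait up to 30 minutes, polling every 15 seconds.
#   wait_until 1800 15 kubeflow_ready && echo "all pods ready"
```

kubectl also has a built-in wait subcommand (for example kubectl wait --for=condition=Ready pods --all -n kubeflow --timeout=1800s), which may be simpler when no custom filtering is needed.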

A namespace named kubeflow is created, containing many deployments and services. There seem to be quite a few more than in 0.5.

$ kubectl get deploy,svc -n kubeflow

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ambassador 1/1 1 1 19m

deployment.apps/argo-controller 1/1 1 1 16m

deployment.apps/argo-ui 1/1 1 1 19m

deployment.apps/jupyter-controller 1/1 1 1 19m

deployment.apps/jupyter-web 1/1 1 1 18m

deployment.apps/katib-controller 1/1 1 1 18m

deployment.apps/katib-manager 1/1 1 1 17m

deployment.apps/katib-ui 1/1 1 1 17m

deployment.apps/kubeflow-dashboard 1/1 1 1 17m

deployment.apps/kubeflow-gatekeeper 1/1 1 1 17m

deployment.apps/kubeflow-login 1/1 1 1 17m

deployment.apps/kubeflow-profiles 1/1 1 1 17m

deployment.apps/metacontroller 1/1 1 1 17m

deployment.apps/metadata 1/1 1 1 15m

deployment.apps/metadata-ui 1/1 1 1 14m

deployment.apps/modeldb-backend 1/1 1 1 15m

deployment.apps/modeldb-store 1/1 1 1 16m

deployment.apps/modeldb-ui 1/1 1 1 15m

deployment.apps/pipelines-api 1/1 1 1 15m

deployment.apps/pipelines-persistence 1/1 1 1 15m

deployment.apps/pipelines-scheduledworkflow 1/1 1 1 15m

deployment.apps/pipelines-ui 1/1 1 1 14m

deployment.apps/pipelines-viewer 1/1 1 1 14m

deployment.apps/pytorch-operator 1/1 1 1 14m

deployment.apps/tf-job-dashboard 1/1 1 1 15m

deployment.apps/tf-job-operator 1/1 1 1 15m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ambassador ClusterIP 10.152.183.73 <none> 80/TCP 19m

service/ambassador-operator ClusterIP 10.152.183.100 <none> 30666/TCP 19m

service/argo-controller-operator ClusterIP 10.152.183.215 <none> 30666/TCP 19m

service/argo-ui ClusterIP 10.152.183.55 <none> 8001/TCP 19m

service/argo-ui-operator ClusterIP 10.152.183.134 <none> 30666/TCP 19m

service/jupyter-controller-operator ClusterIP 10.152.183.104 <none> 30666/TCP 19m

service/jupyter-web ClusterIP 10.152.183.191 <none> 5000/TCP 18m

service/jupyter-web-operator ClusterIP 10.152.183.205 <none> 30666/TCP 19m

service/katib-controller ClusterIP 10.152.183.200 <none> 443/TCP 18m

service/katib-controller-operator ClusterIP 10.152.183.189 <none> 30666/TCP 18m

service/katib-db ClusterIP 10.152.183.45 <none> 3306/TCP 18m

service/katib-db-endpoints ClusterIP None <none> <none> 18m

service/katib-db-operator ClusterIP 10.152.183.48 <none> 30666/TCP 18m

service/katib-manager ClusterIP 10.152.183.222 <none> 6789/TCP 17m

service/katib-manager-operator ClusterIP 10.152.183.167 <none> 30666/TCP 18m

service/katib-ui ClusterIP 10.152.183.22 <none> 80/TCP 17m

service/katib-ui-operator ClusterIP 10.152.183.199 <none> 30666/TCP 18m

service/kubeflow-dashboard ClusterIP 10.152.183.237 <none> 8082/TCP 17m

service/kubeflow-dashboard-operator ClusterIP 10.152.183.66 <none> 30666/TCP 18m

service/kubeflow-gatekeeper ClusterIP 10.152.183.240 <none> 8085/TCP 17m

service/kubeflow-gatekeeper-operator ClusterIP 10.152.183.2 <none> 30666/TCP 17m

service/kubeflow-login ClusterIP 10.152.183.31 <none> 5000/TCP 17m

service/kubeflow-login-operator ClusterIP 10.152.183.252 <none> 30666/TCP 17m

service/kubeflow-profiles ClusterIP 10.152.183.239 <none> 8081/TCP 17m

service/kubeflow-profiles-operator ClusterIP 10.152.183.23 <none> 30666/TCP 18m

service/metacontroller ClusterIP 10.152.183.112 <none> 9999/TCP 17m

service/metacontroller-operator ClusterIP 10.152.183.13 <none> 30666/TCP 18m

service/metadata ClusterIP 10.152.183.164 <none> 8080/TCP 15m

service/metadata-db ClusterIP 10.152.183.209 <none> 3306/TCP 16m

service/metadata-db-endpoints ClusterIP None <none> <none> 16m

service/metadata-db-operator ClusterIP 10.152.183.67 <none> 30666/TCP 16m

service/metadata-operator ClusterIP 10.152.183.121 <none> 30666/TCP 15m

service/metadata-ui ClusterIP 10.152.183.182 <none> 3000/TCP 14m

service/metadata-ui-operator ClusterIP 10.152.183.6 <none> 30666/TCP 16m

service/minio ClusterIP 10.152.183.213 <none> 9000/TCP 16m

service/minio-endpoints ClusterIP None <none> <none> 16m

service/minio-operator ClusterIP 10.152.183.136 <none> 30666/TCP 17m

service/modeldb-backend ClusterIP 10.152.183.69 <none> 8085/TCP,8080/TCP 15m

service/modeldb-backend-operator ClusterIP 10.152.183.94 <none> 30666/TCP 16m

service/modeldb-db ClusterIP 10.152.183.155 <none> 3306/TCP 15m

service/modeldb-db-endpoints ClusterIP None <none> <none> 15m

service/modeldb-db-operator ClusterIP 10.152.183.56 <none> 30666/TCP 16m

service/modeldb-store ClusterIP 10.152.183.20 <none> 8086/TCP 16m

service/modeldb-store-operator ClusterIP 10.152.183.224 <none> 30666/TCP 17m

service/modeldb-ui ClusterIP 10.152.183.7 <none> 3000/TCP 15m

service/modeldb-ui-operator ClusterIP 10.152.183.130 <none> 30666/TCP 16m

service/pipelines-api ClusterIP 10.152.183.43 <none> 8887/TCP,8888/TCP 15m

service/pipelines-api-operator ClusterIP 10.152.183.34 <none> 30666/TCP 16m

service/pipelines-db ClusterIP 10.152.183.53 <none> 3306/TCP 16m

service/pipelines-db-endpoints ClusterIP None <none> <none> 16m

service/pipelines-db-operator ClusterIP 10.152.183.32 <none> 30666/TCP 16m

service/pipelines-persistence-operator ClusterIP 10.152.183.161 <none> 30666/TCP 15m

service/pipelines-scheduledworkflow-operator ClusterIP 10.152.183.74 <none> 30666/TCP 15m

service/pipelines-ui ClusterIP 10.152.183.68 <none> 3000/TCP,3001/TCP 14m

service/pipelines-ui-operator ClusterIP 10.152.183.135 <none> 30666/TCP 15m

service/pipelines-viewer ClusterIP 10.152.183.61 <none> 8001/TCP 14m

service/pipelines-viewer-operator ClusterIP 10.152.183.25 <none> 30666/TCP 15m

service/pytorch-operator-operator ClusterIP 10.152.183.208 <none> 30666/TCP 14m

service/tf-job-dashboard ClusterIP 10.152.183.179 <none> 8080/TCP 15m

service/tf-job-dashboard-operator ClusterIP 10.152.183.49 <none> 30666/TCP 15m

service/tf-job-operator-operator ClusterIP 10.152.183.241 <none> 30666/TCP 15m
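More than half of the services in this listing are "-operator" or "-endpoints" companions. My assumption is that these are created by Juju alongside each application rather than being Kubeflow components themselves. Under that assumption, a small filter (app_services is a hypothetical helper name) makes the listing easier to scan:

```shell
# app_services
# Reads "kubectl get svc" output on stdin and prints name and port(s) for
# every service whose name does not end in -operator or -endpoints.
# Caveat: a real service whose own name ends in "-operator" would be hidden
# too; in the listing above there is no such service.
app_services() {
  awk '$1 != "NAME" && $1 !~ /-(operator|endpoints)$/ { print $1, $5 }'
}

# Usage (requires the cluster from this post):
#   kubectl get svc -n kubeflow | app_services
```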

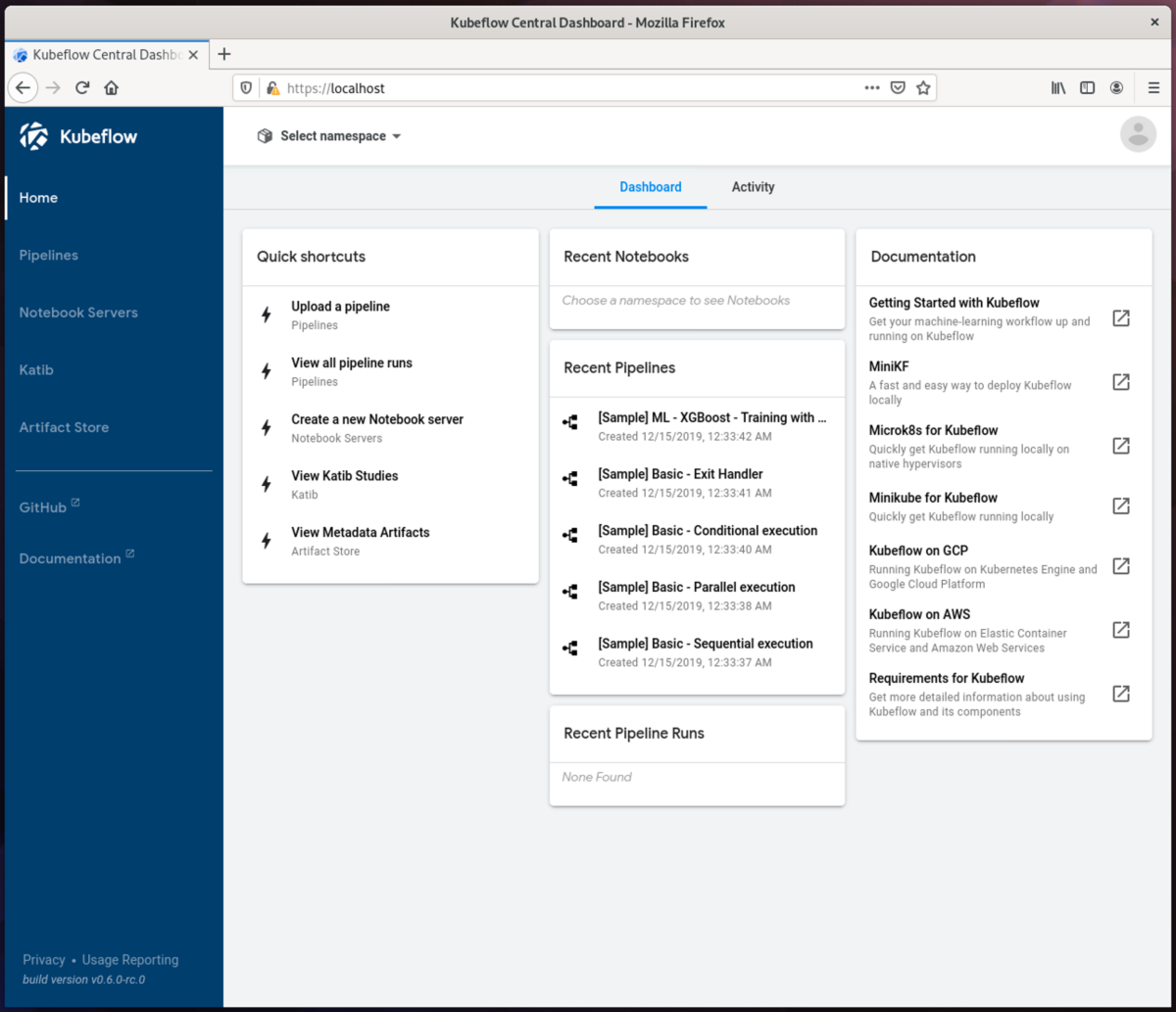

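Connecting to localhost in a browser brings up a login screen. Log in as the admin user with the password shown when the installation finished. The password can be displayed again with the microk8s.juju command.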

$ microk8s.juju config kubeflow-gatekeeper password
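Both values can be fetched in one go with the two commands the installer printed. A minimal sketch follows; kubeflow_credentials and the JUJU variable are hypothetical names introduced here so the command can be swapped out.

```shell
# Command used to talk to Juju; defaults to the one shown by the installer.
JUJU="${JUJU:-microk8s.juju}"

# Prints the dashboard username and password as key=value lines.
# Returns non-zero if either lookup fails.
kubeflow_credentials() {
  _user=$("$JUJU" config kubeflow-gatekeeper username) || return 1
  _pass=$("$JUJU" config kubeflow-gatekeeper password) || return 1
  printf 'username=%s\npassword=%s\n' "$_user" "$_pass"
}

# Usage (requires the kubeflow add-on to be enabled):
#   kubeflow_credentials
```

Note that this prints the password to the terminal, just as the original command does.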

The familiar Kubeflow dashboard appeared.

It looks like v0.6.0-rc.0 was installed. The latest version is currently 0.7, and the next one is apparently going to be 1.0.

kubeflow/ROADMAP.md at master · kubeflow/kubeflow · GitHub

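So a MicroK8s release in the near future will probably ship Kubeflow 1.0.

Kubeflow still has a high barrier to entry, but having it as a MicroK8s add-on makes things considerably easier. It also looks like a good fit for setting up a development environment that uses Kubeflow. This time I prepared a fairly powerful VM on GCP with 8 vCPUs and 30 GB of memory and connected to it via remote desktop using GNOME and xrdp. A Linux machine with a Core i7 and around 32 GB of memory should make for a quite usable environment.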